Making your content LLM friendly doesn’t mean rewriting your site for machines or stuffing it with odd prompt bait.

It’s mostly about clarity, structure, and giving models the kind of signals they naturally gravitate toward, the same things humans appreciate anyway.

In this guide, we’ll break down how to make your pages easier for LLMs to extract, summarize, and recommend, while keeping everything that already works for SEO.

The LLM-Friendly Trap for SEO KPIs

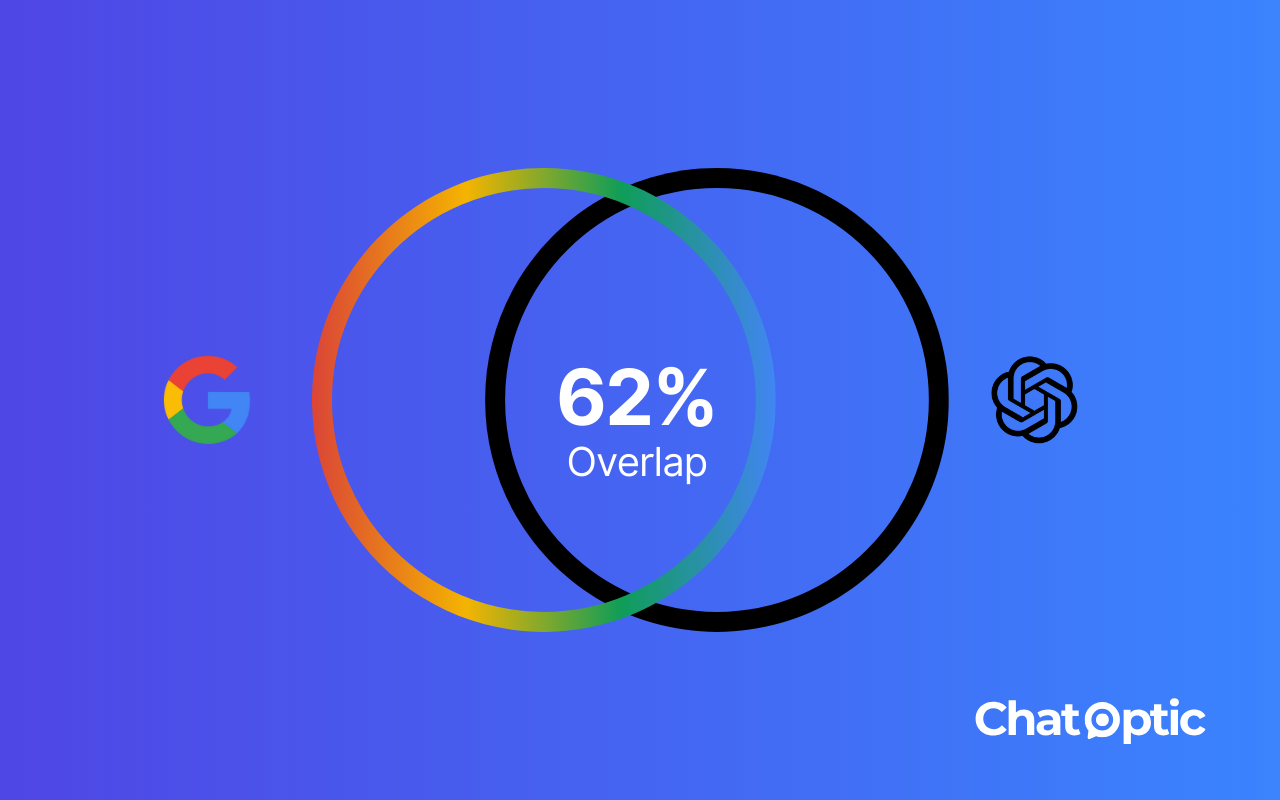

People talk about LLM optimization as if it were either the opposite of optimizing for Google or exactly the same thing. It is neither.

Some of the core ideas overlap: clarity, focus, clean structure, and strong messaging help both worlds at the same time. But there are some areas where the rules pull in different directions, and this is exactly where SEO/GEO teams get into trouble.

To show what that looks like, imagine you run a company that sells CRM software.

You have a blog post titled “How to Choose the Best CRM Software for a Small Business” and it is doing pretty well on Google. It ranks in the top 3, brings in around 1,000 organic visitors every month, and is supported by good on-page signals and solid links.

Then you start using AI visibility tools that give you recommendations to improve your visibility in LLMs. You follow the suggestions and suddenly your presence in ChatGPT shoots up. You even see around 100 new visits a month coming from there. Your dashboard looks amazing: everything is green, everything goes up.

But here is the part nobody talks about.

One of those optimizations made the page easier for LLMs to extract, but it quietly broke one of the SEO signals that kept the page strong on Google. For example, rewriting the intro so that the first paragraph fully answers the main question might help an LLM understand your content, but it can also cause users to bounce faster and spend less time on page, which are signals Google relies on for rankings.

So now your AI visibility has improved, but your Google ranking has dropped to position 9 and organic traffic has fallen from 1,000 to around 100 visitors a month. Suddenly the picture changes. You are now getting 100 visitors from Google and 100 from AI chatbots. That is 200 in total, far less than the 1,000 visitors you were getting from Google alone before the “improvement”.
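To make the arithmetic explicit, here is a minimal Python sketch that sums visits across every channel instead of reading the AI channel on its own. The numbers are the hypothetical ones from the example above, and the assumption that the page had zero AI chatbot visits before the change is ours, not a measured figure.

```python
from typing import Dict

# Hypothetical monthly visits from the CRM example.
# Assumption: the page had no AI chatbot traffic before the change.
before: Dict[str, int] = {"google_organic": 1_000, "ai_chatbots": 0}
after: Dict[str, int] = {"google_organic": 100, "ai_chatbots": 100}


def total(visits: Dict[str, int]) -> int:
    """Sum visits across every channel, not just the one a tool reports on."""
    return sum(visits.values())


ai_gain = after["ai_chatbots"] - before["ai_chatbots"]
net_change = total(after) - total(before)

print(f"AI-only view: +{ai_gain} visits")                    # AI-only view: +100 visits
print(f"All channels: {total(before)} -> {total(after)}")    # All channels: 1000 -> 200
print(f"Net change: {net_change} visits")                    # Net change: -800 visits
```

The AI-only view reports a clean win of +100, while the all-channel view shows the page lost 800 visits a month.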

This is the trap many teams fall into. AI visibility tools usually measure only what happens inside generative engines. They don’t account for the full traffic mix, and they don’t warn you when an LLM-friendly change hurts your SEO foundations.

So the graphs inside the tool look great, but the KPIs that matter to your business may be slipping under the surface.

This is exactly why LLM-friendly and SEO-friendly content should not compete. They need to live together, and every LLM optimization has to be tested in a way that keeps your organic performance safe.

5 Core Principles That Make Content LLM Friendly (and Still SEO Safe)

Here is the part everyone expects to be super technical, but it really is not.

Making your content LLM friendly doesn’t mean hacking your site for machines. It just means giving models a cleaner way to understand what you are saying, while keeping the things Google already loves.

1. Lead with clarity while still giving users a reason to keep reading

LLMs work best when the main idea is clear right from the start. They need an anchor, a simple signal that says, “this page is about X”. Google also likes this because it helps confirm the intent.

But clarity does not mean giving away the entire answer in the first sentence. If you hand the user everything upfront, they have no reason to scroll. Bounce rate goes up, time on page drops, and your SEO suffers.

The goal is simple: answer the core question in a clean, short way, but also hint that deeper value is waiting below.

Before:

“Choosing the right CRM can feel overwhelming. There are so many features, providers, and sales tactics that confuse buyers…”

This is storytelling, but the page’s purpose is not clear and models have to guess.

After:

“The best CRM for a small business is one that keeps your team organized, supports simple automation, and connects easily with your tools. In this guide we break down each factor so you can compare options with confidence.”

This answers the question, but also creates a natural pull to keep reading.

Why this is LLM friendly: The model gets the core answer instantly, plus a clear outline of what the rest of the page covers.

Why this does not hurt SEO: You give users value upfront without ending the journey. They still need the deeper breakdown, the comparisons, and the examples, which keeps them scrolling and preserves strong engagement signals.

2. Stick to one intent per page

This is where LLM behavior and SEO overlap almost perfectly.

A page that jumps between too many angles confuses humans, confuses models, and dilutes your rankings.

If your page teaches something, teach it. If it compares, compare. If it walks through steps, explain the steps. One page, one clear purpose.

Before: A page that jumps between topics like what a CRM is, top CRM vendors, CRM pricing, CRM for enterprises, CRM for freelancers, and CRM integrations, all on one page.

After: A page focused solely on explaining “How to choose the best CRM for a small business.” Everything inside supports that decision.

Why this is LLM friendly: Models don’t have to guess the purpose of the page. Extraction becomes accurate and focused.

Why this does not hurt SEO: In fact, it helps. Clear intent strengthens relevance and ranking stability. You compete for 1 SERP instead of 5.

3. Use structure as a guide, not decoration

LLMs rely on structure to understand the flow. Headings, subheadings, bullets, and short paragraphs help the model break the content into concepts it can extract and reuse.

Google likes that too, because structure signals relevance. But here is the key: structure is a support system. You aren’t “optimizing for the algorithm”; you are making the content easier to navigate for everyone.

Before:

A long, unbroken block like:

“Small businesses choosing a CRM should compare ease of use and integrations because the learning curve can slow the team and pricing varies across vendors and the wrong integrations…”

After: Clear, simple sections:

- Ease of use: One sentence on why it matters.

- Integrations: One sentence on what to check.

- Pricing: One sentence on what affects cost.

Why this is LLM friendly: The model can easily map each idea and reuse it in answers without merging unrelated points.

Why this does not hurt SEO: Strong headings and readable sections improve user signals and relevance. Google loves structured clarity.
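One quick way to check whether your structure is doing its job is to read the page’s heading outline on its own: if the headings alone tell the story, both readers and models have a clear map. Below is a minimal sketch using Python’s standard-library `html.parser`. It assumes you have the page’s HTML, only looks at `h1` to `h6`, and is not how any particular LLM or crawler actually parses pages; the sample HTML is hypothetical.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple


class OutlineParser(HTMLParser):
    """Records (level, text) for every h1-h6 heading, in document order."""

    HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}

    def __init__(self) -> None:
        super().__init__()
        self.headings: List[Tuple[int, str]] = []
        self._level: Optional[int] = None
        self._parts: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.HEADINGS:
            self._level = int(tag[1])
            self._parts = []

    def handle_data(self, data):
        if self._level is not None:  # only collect text inside a heading
            self._parts.append(data)

    def handle_endtag(self, tag):
        if tag in self.HEADINGS and self._level is not None:
            text = " ".join("".join(self._parts).split())  # collapse whitespace
            self.headings.append((self._level, text))
            self._level = None


def outline(html: str) -> List[Tuple[int, str]]:
    parser = OutlineParser()
    parser.feed(html)
    parser.close()
    return parser.headings


page = """
<h1>How to Choose the Best CRM Software for a Small Business</h1>
<p>The best CRM for a small business keeps your team organized...</p>
<h2>Ease of use</h2><p>...</p>
<h2>Integrations</h2><p>...</p>
<h2>Pricing</h2><p>...</p>
"""
for level, text in outline(page):
    print("  " * (level - 1) + text)
```

If the printed outline reads like a table of contents for one clear intent, the structure is working as a guide rather than decoration.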

4. Add clean, extractable statements

LLMs love lines that feel like answers: short sentences they can lift, rewrite, or reuse as part of a reply.

These are usually simple explanations, clear definitions, concrete criteria, or direct comparisons. Use them naturally and in moderation.

Google likes them too, because they make content easier to read and easier to understand.

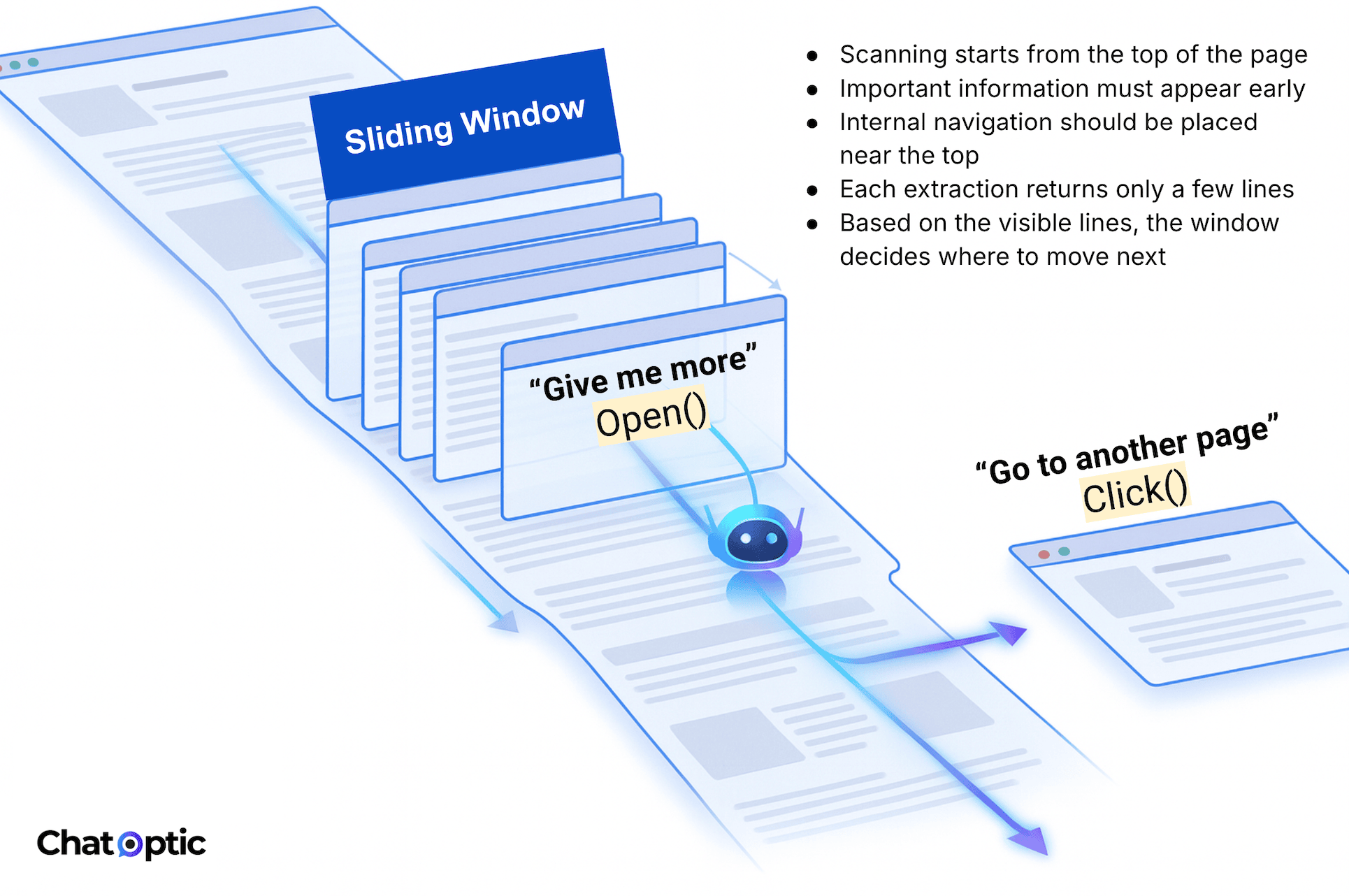

There is also a technical reason this matters.

As Dan Petrovic explains in his great article “How GPT Sees the Web”, LLMs do not read full pages. They receive small text snippets and then move through a page using a “Sliding Window Attention” (SWA) technique.

Each window shows only a limited slice of content. If an idea is buried inside long paragraphs, the model may never see it. If the idea is expressed as a clean standalone statement, it is far more likely to be captured inside one of those windows and reused.

That is why clarity at the sentence level matters so much.
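Here is a toy Python illustration of the idea as described above: the page is cut into fixed-size, overlapping slices, and a statement only counts as “captured” if it fits whole inside one slice. The window size, the stride, and counting words instead of tokens are all arbitrary assumptions. This is not how sliding window attention works inside a transformer (there, each token attends to a fixed number of nearby tokens), and it is not how any specific LLM retrieves pages; it only shows why a long run-on sentence is harder to see in one piece than a short, self-contained one.

```python
from typing import List


def windows(words: List[str], size: int, stride: int) -> List[List[str]]:
    """Cut a word list into overlapping slices of `size` words, moving `stride` words each time."""
    if size <= 0 or stride <= 0:
        raise ValueError("size and stride must be positive")
    slices = []
    start = 0
    while True:
        slices.append(words[start:start + size])
        if start + size >= len(words):
            break  # this slice already reaches the end of the text
        start += stride
    return slices


def fully_visible(sentence: str, text: str, size: int, stride: int) -> bool:
    """True if the whole sentence appears, word for word, inside at least one slice."""
    target = sentence.split()
    for window in windows(text.split(), size, stride):
        for i in range(len(window) - len(target) + 1):
            if window[i:i + len(target)] == target:
                return True
    return False


long_sentence = (
    "Small businesses choosing a CRM should compare ease of use and integrations "
    "because the learning curve can slow the team and pricing varies across vendors."
)
short_sentence = "A small business CRM should include simple automation and fast onboarding."
page = long_sentence + " " + short_sentence

print(fully_visible(long_sentence, page, size=16, stride=8))   # False
print(fully_visible(short_sentence, page, size=16, stride=8))  # True
```

The 25-word run-on never fits inside a 16-word slice, so it is only ever seen in fragments, while the 11-word statement lands intact in one slice.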

Before:

“There are many CRM options, and every business should explore what fits its workflow.”

Vague, hard to reuse, easy to skip.

After:

“A small business CRM should include simple automation, fast onboarding, and integrations with tools like Google Workspace and Slack.”

Why this is LLM friendly: It is a self-contained statement. Even if the model only sees this line inside a sliding window, it already has clear criteria it can summarize or restate with confidence.

Why this does not hurt SEO: It adds real value for readers and improves clarity and scannability instead of forcing keywords. Google treats this as helpful content, not manipulation.

Think of these lines as anchor points. They help humans skim, search engines understand, and LLMs extract meaning from partial views of your page.

5. Keep your core SEO signals untouched

This is the part that protects you from the CRM scenario we talked about earlier.

When optimizing for LLMs, never touch your:

- Core Web Vitals:

  - Loading Speed (LCP, Largest Contentful Paint): Changes in content formatting or the addition of scripts (even those supporting LLM workflows) must not slow down the load time of the largest content element on the page.

  - Visual Stability (CLS, Cumulative Layout Shift): When adding summary boxes, tables, or reorganizing sections for LLM clarity, make sure nothing shifts during load. Unexpected layout jumps damage your CLS score.

- Title and Meta Description tags: These are the biggest drivers of your organic CTR. Keep titles user focused and compelling. Do not rewrite them just to “signal” something to an LLM. A drop in CTR might push your Google rankings down, no matter how good your AI visibility looks.

- Canonical URLs: Every LLM-friendly update must preserve the correct canonical tag. Breaking canonicals or pointing them at the wrong URL can create duplication issues that harm rankings fast.

- URL Slug: Keep the original URL slug intact. It holds ranking history, link equity, and relevance signals. Changing the slug for clarity or “LLM friendliness” forces Google to reindex the page and treat it like new, which usually results in a ranking drop.

- Internal Link Structure: Internal links show Google how your site is organized. Do not remove or thin out important links just because they matter less to LLMs.

- Ranking Keywords: These are the terms that bring you organic traffic and define your page’s topical relevance. When rewriting for LLM clarity, do not dilute your primary keywords or shift the core focus of the page. Even small changes can weaken your rankings.
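A simple safeguard is to diff these signals between the live page and the LLM-friendly draft before publishing. Below is a minimal sketch using only Python’s standard library (`html.parser` and `urllib.parse`). It covers the title, meta description, canonical, URL slug, and internal links; Core Web Vitals need real measurement (for example PageSpeed Insights or Lighthouse), so they are left out. The rule that relative links and links to the same host count as internal is a simplifying assumption, and the example HTML and URLs are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class SignalParser(HTMLParser):
    """Collects title, meta description, canonical and link hrefs from raw HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.meta_description = None
        self.canonical = None
        self.links = set()
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)  # attribute values can be None, hence the `or ""` guards
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (a.get("name") or "").lower() == "description":
            self.meta_description = a.get("content")
        elif tag == "link" and "canonical" in (a.get("rel") or "").lower().split():
            self.canonical = a.get("href")
        elif tag == "a" and a.get("href"):
            self.links.add(a["href"])

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def signals(html: str) -> SignalParser:
    parser = SignalParser()
    parser.feed(html)
    parser.close()
    return parser


def internal(links: set, host: str) -> set:
    """Relative links and links to `host` count as internal (a simplification)."""
    return {
        href for href in links
        if urlparse(href).scheme in ("", "http", "https")
        and urlparse(href).netloc in ("", host)
    }


def regressions(old_url: str, new_url: str, old_html: str, new_html: str) -> list:
    """Lists every protected signal that differs between two versions of a page."""
    old, new = signals(old_html), signals(new_html)
    checks = {
        "title": (old.title.strip(), new.title.strip()),
        "meta description": (old.meta_description, new.meta_description),
        "canonical": (old.canonical, new.canonical),
        "URL slug": (urlparse(old_url).path, urlparse(new_url).path),
    }
    problems = [
        f"{name} changed: {before!r} -> {after!r}"
        for name, (before, after) in checks.items()
        if before != after
    ]
    host = urlparse(old_url).netloc
    lost = internal(old.links, host) - internal(new.links, host)
    if lost:
        problems.append(f"internal links removed: {sorted(lost)}")
    return problems


old_html = (
    "<title>How to Choose the Best CRM Software for a Small Business</title>"
    '<link rel="canonical" href="https://example.com/crm-guide">'
    '<a href="/crm-pricing">Pricing</a> <a href="/crm-integrations">Integrations</a>'
)
new_html = (
    "<title>Best CRM for Small Business: AI Summary</title>"
    '<link rel="canonical" href="https://example.com/crm-guide">'
    '<a href="/crm-pricing">Pricing</a>'
)
url = "https://example.com/crm-guide"
for problem in regressions(url, url, old_html, new_html):
    print(problem)
```

An empty list means none of these signals moved; anything printed is worth a second look before the draft goes live.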

What NOT TO DO and Why It Can Hurt Your SEO

Most LLM-friendly mistakes come from trying too hard. Teams hear “optimize for AI” and start breaking fundamental SEO signals that should stay untouched. The key traps revolve around sacrificing User Experience (UX) and E-E-A-T signals (Experience, Expertise, Authoritativeness, Trustworthiness) for the sake of machine efficiency.

Here are the critical traps to avoid:

1. The Fluff Trap: Adding AI-Specific Metadata or Language

LLMs are sophisticated; they do not need explicit machine instructions embedded in your content.

Bad Move:

Injecting text without value like:

“This content has been parsed for high-confidence AI extraction.”

Why it Hurts SEO:

- Weakens clarity and trust signals: Google sees this as self-referential “fluff”. It breaks the natural flow and dilutes the perceived quality of the expertise.

- Increases bounce risk: Users landing on the page may find the text confusing or unnecessary, which increases the likelihood of an early bounce and cuts time on page.

What to Do Instead:

Focus on clean, natural language. LLMs respond to semantic clarity and structure, so use strong headings and definitive first sentences.

2. The Thin Content Trap: Drastically Shortening Proven Content

The goal of LLM optimization is clarity, not brevity. Cutting depth is the fastest way to lose Google rankings.

Bad Move: Taking a high-performing 2,500-word guide (which ranks well because of its comprehensive coverage) and trimming it down to 800 words because an AI visibility tool recommended more “compact information”.

Why it Hurts SEO:

- Damages topical authority: The page loses its comprehensive coverage, often dropping niche or secondary keywords it ranked for, which were crucial for covering the full spectrum of user intent.

- Fails full user intent: Google rewards pages that cover a topic exhaustively. Reducing length creates “Thin Content” that no longer satisfies complex queries, causing rankings to drop.

What to Do Instead: Maintain your content depth by breaking it into smaller, logically structured blocks using H3/H4 tags.
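Before publishing a shortened version, it can help to check what the rewrite actually removed. Here is a minimal Python sketch, assuming you have the plain text of both versions and a list of the keywords the page ranks for (for example, exported from Google Search Console). It reports the word-count change and which of those keywords no longer appear. The function names and sample text are hypothetical, and exact phrase matching is a simplification that will miss plurals and close variants.

```python
import re
from typing import Dict, List


def words(text: str) -> List[str]:
    """Lowercase word tokens; punctuation is dropped."""
    return re.findall(r"[a-z0-9']+", text.lower())


def has_phrase(text_words: List[str], phrase: str) -> bool:
    """Exact phrase match on word boundaries (a deliberate simplification)."""
    return f" {' '.join(words(phrase))} " in f" {' '.join(text_words)} "


def depth_report(old_text: str, new_text: str, ranking_keywords: List[str]) -> Dict:
    old_words, new_words = words(old_text), words(new_text)
    return {
        "old_word_count": len(old_words),
        "new_word_count": len(new_words),
        "change_pct": round(100 * (len(new_words) - len(old_words)) / max(len(old_words), 1), 1),
        # keywords the old version contained that the new version no longer mentions
        "keywords_lost": [
            kw for kw in ranking_keywords
            if has_phrase(old_words, kw) and not has_phrase(new_words, kw)
        ],
    }


old = "Compare CRM pricing, CRM integrations, and onboarding time before you buy."
new = "Compare CRM pricing before you buy."
print(depth_report(old, new, ["crm pricing", "crm integrations", "onboarding time"]))
# {'old_word_count': 11, 'new_word_count': 6, 'change_pct': -45.5, 'keywords_lost': ['crm integrations', 'onboarding time']}
```

A large negative `change_pct` combined with a non-empty `keywords_lost` is exactly the thin-content pattern described above.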

3. The UX Decay Trap: Prioritizing Extraction Over Retention

This trap involves making the content too easy to extract, inadvertently destroying the signals Google uses to measure user satisfaction.

Bad Move: Replacing context and narrative with a fully resolved, definitive answer at the very top of the page, leaving no reason for the reader to continue.

Why it Hurts SEO:

- Short time on page: By providing the full, definitive answer instantly, you maximize the chance a user will get what they need and immediately return to the SERP. This signals dissatisfaction to Google, causing the page to slide down in rankings.

- Loss of Experience Signal: Removing personal anecdotes or case studies, the Experience (E) in E-E-A-T, makes the content generic and less trustworthy.

What to Do Instead: Use a short TL;DR to frame the answer, but keep the full reasoning, experience, and examples in the body. Let the LLM extract clarity without removing the human reason to stay.

4. The Context Removal Trap: Deleting Deep Supportive Elements

LLMs are extracting information, but humans (and Google’s algorithms) are evaluating expertise.

Bad Move: Removing detailed expert citations, comparative analysis sections, or specific user case scenarios because the LLM only needs the conclusion.

Why it Hurts SEO:

- Reduces Authority: The deep, supportive text is often what establishes your Authoritativeness and Trustworthiness (A and T in E-E-A-T). Removing it renders the remaining content surface-level.

- Breaks Internal Context: Detailed paragraphs often contain the anchor text and surrounding context necessary to give full power to your Internal Linking structure. Removing this context weakens the entire site’s link equity.

What to Do Instead: Keep your depth and supporting evidence. LLMs simply ignore the parts they don’t need for a summary, but those parts are vital for your ranking.

Use Chatoptic Persona Writer to Create LLM-Friendly Content for Personas

Anyone can write content that is LLM friendly without hurting SEO.

You do not need to abandon long form writing, storytelling, or depth. You just need to be more intentional about clarity, structure, and how ideas are expressed at the sentence level. When content is written for real people first, and organized in a way models can extract meaning from partial views, SEO and GEO naturally align.

That said, doing this consistently takes time.

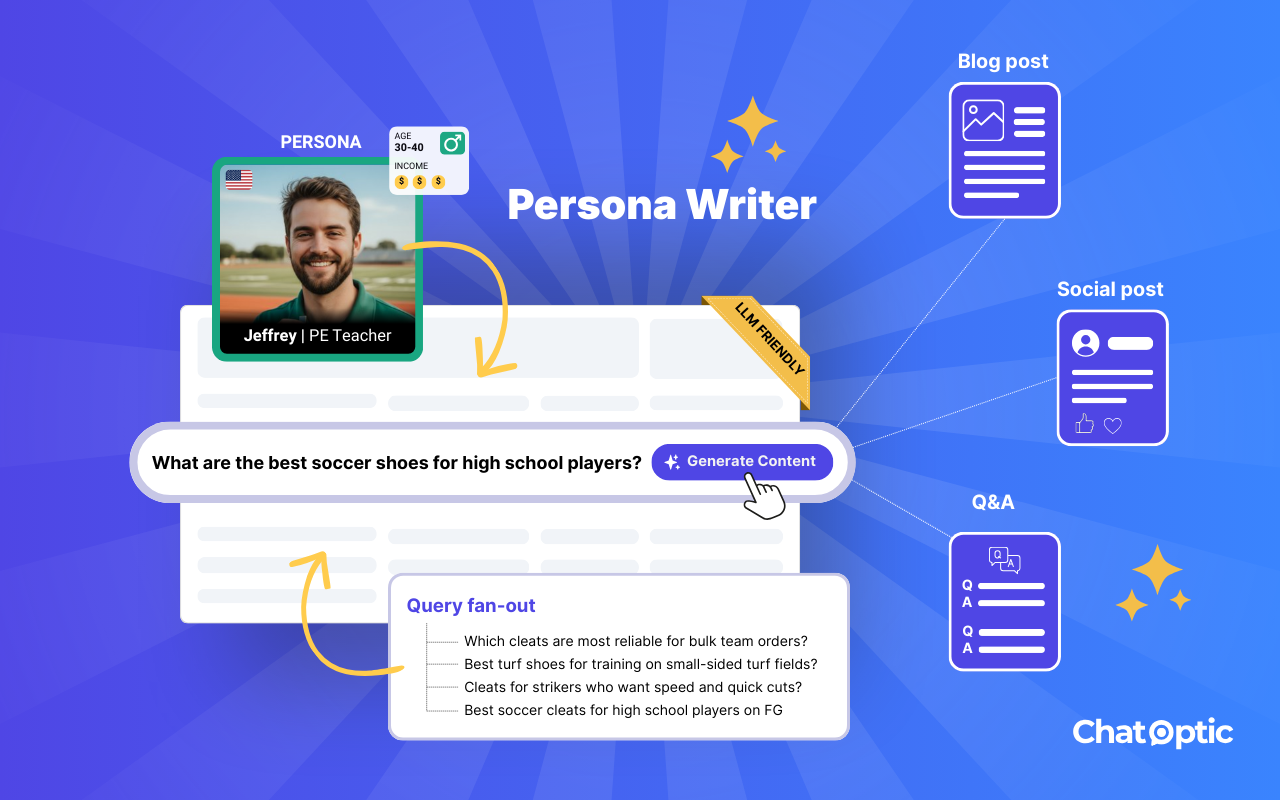

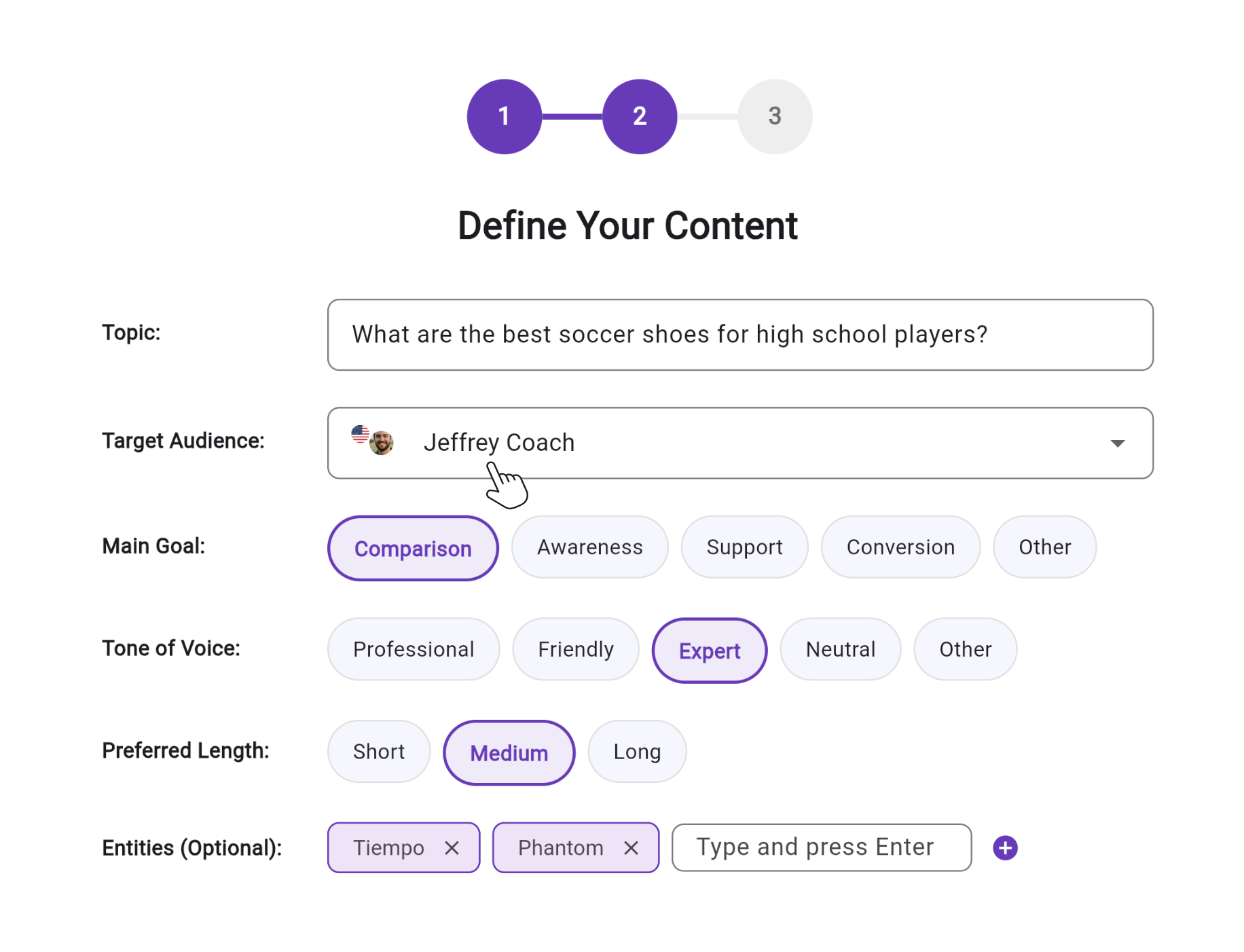

If you want to move faster, tailor content to specific personas, and understand where your brand does not appear in AI answers today, this is where our Persona Writer comes in.

Instead of guessing how different audiences phrase questions or what angle an LLM prefers, Chatoptic Persona Writer works in three steps:

- It simulates how specific personas ask questions in AI models, based on role, demographics, intent, and context.

- It analyzes which brands and sources appear in those answers and which ones do not.

- It helps you generate content written specifically for those personas, structured in a way LLMs can easily extract, without sacrificing SEO best practices.

The platform generates focused, audience-driven content that improves AI visibility where it actually matters: in the answers your real customers are already reading.