Marketing measurement used to be pretty straightforward: you tracked traffic, you tracked conversions, and you improved what moved the needle.

But in 2025, discovery is shifting fast.

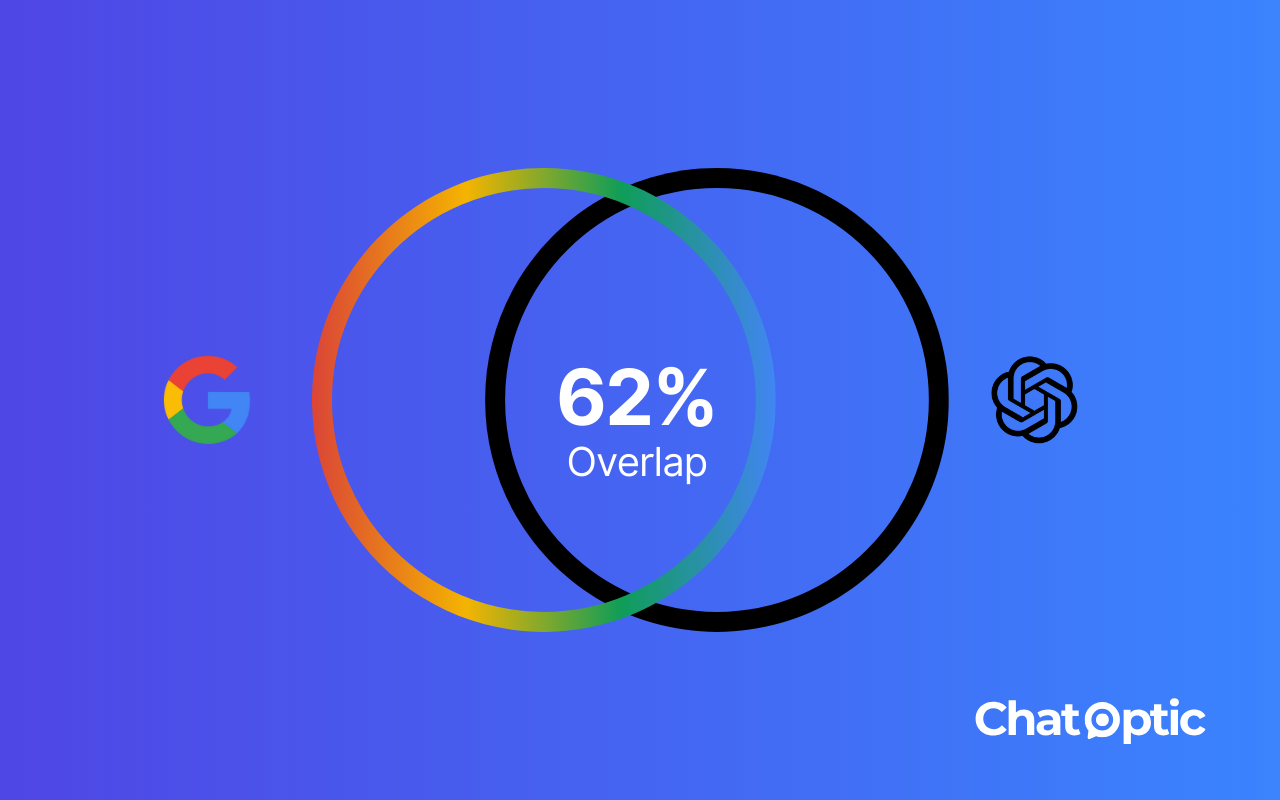

ChatGPT alone is already used at massive scale, and more and more “search moments” happen inside conversational AI. At the same time, traditional search keeps moving toward a zero click experience. According to SparkToro, about 58.5% of Google searches in the US end without a click, meaning the user gets what they need without ever visiting a website.

So even if your SEO is strong, it’s becoming less safe to rely on “organic clicks” as your main discovery engine.

And this is not a small trend. Bloomberg Intelligence estimates the generative AI market could reach $1.3 trillion by 2032, which tells you where budgets, products, and user behavior are heading.

That’s why CMOs are now asking a new question: If AI is giving the answer, where does my brand show up inside that answer?

Why AI visibility KPIs became confusing

Once brands realized that AI answers matter, measurement rushed in.

Very fast, the market filled up with new KPIs:

- AI share of voice

- Prompt coverage

- Answer quality

- Sentiment

- Hallucination rate

- Zero click influence

Each one sounds reasonable, and some are genuinely useful. Together, though, they create a problem.

CMOs are suddenly looking at dashboards that feel familiar and unfamiliar at the same time. Familiar, because it looks like SEO all over again. Unfamiliar, because the units don’t behave the same way.

In classic search, most metrics were tied to a clear outcome: traffic.

In AI driven discovery, there is no click in most cases. There is no session, no bounce rate, no conversion path you can follow end to end.

So teams start measuring everything they can measure, hoping clarity will emerge from volume.

It rarely does.

The result is that many brands know they are “doing GEO”, but struggle to answer one simple question: Is our visibility in AI actually getting better in a way that impacts demand?

To answer that, we need to zoom out before we zoom in.

And the best way to do that is to borrow a lesson we already learned the hard way in SEO.

A quick reality check from SEO

If this feels familiar, that’s because it is.

In SEO, we also went through a phase where measurement got messy.

We tracked everything:

- SERP CTR

- Bounce rate

- Time on site

- Pages per session

- Rankings

All valid metrics, all useful in the right context.

But when CMOs had to understand real SEO success in one sentence, it usually came down to this: Are we seeing an increase over time in organic traffic from non-branded keywords in our category?

Everything else helped explain why the answer was yes or no:

- Good CTR helped explain strong meta titles and descriptions.

- High bounce rate explained weak intent match.

- Top rankings explained solid on page and off page optimization.

But none of those were the goal by themselves. They were supporting metrics.

Before we break AI visibility into sub metrics, we need to agree on the one thing we actually want to measure.

And to do that, we need to understand how visibility really works inside LLMs.

How AI Visibility Really Works

In classic Google search, relevance is evaluated at the query-page level. Pages are ranked based on how well they match a searched phrase, along with signals like authority, links, and content quality. While some personalization exists, relevance is largely consistent across users.

Generative engines work differently. They do not rank pages. They generate answers based on the query, the inferred intent, and the context available from current and past interactions.

Because of this, the same intent can surface different brands depending on how the question is phrased and on signals accumulated during the conversation, such as prior questions or implied constraints.

In a generative environment, visibility is no longer about position. It is about whether a brand is included at all, and how it is framed within a synthesized answer.

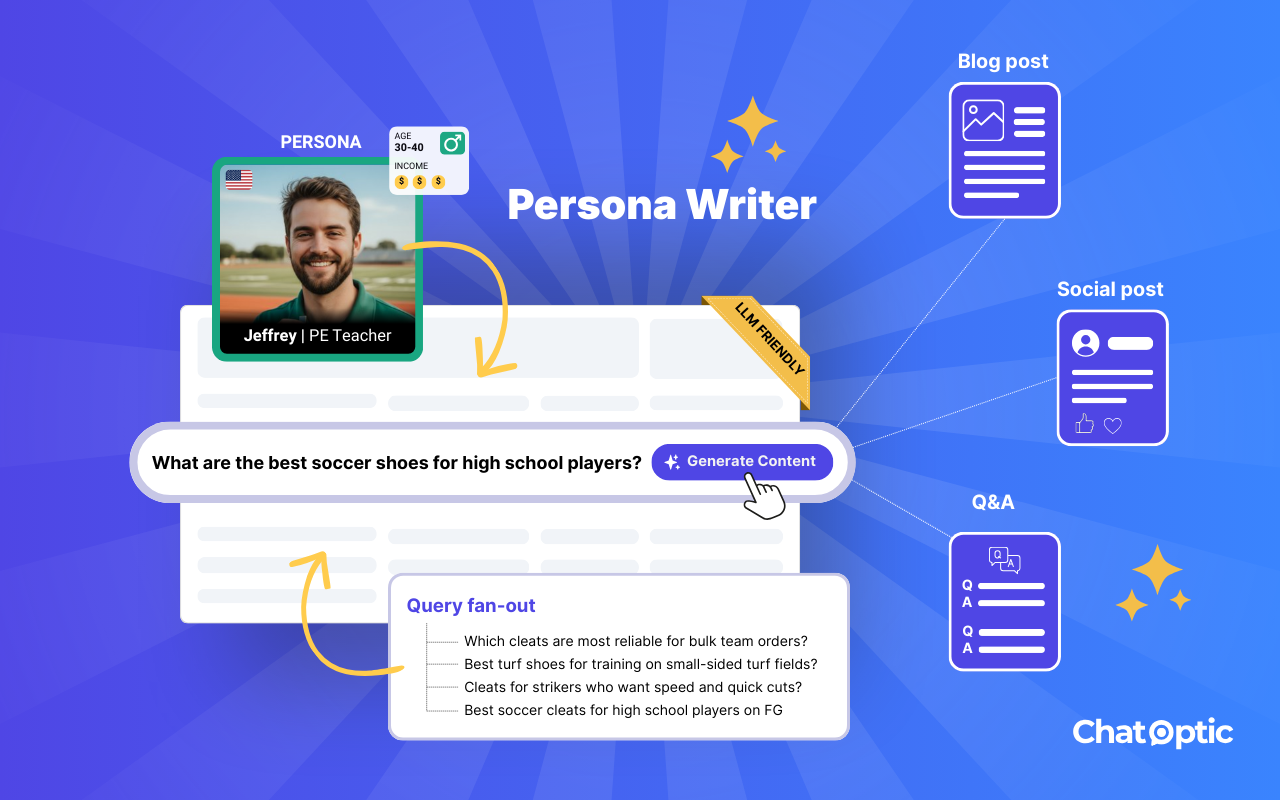

This is why Chatoptic measures AI visibility through what we call LLM Persona Intelligence. Instead of relying on generic brand appearances across prompts, all measurements are based on persona-driven prompts that reflect how different real buyers naturally ask questions. Generative engines don’t really answer in a neutral or anonymous way, so this approach better reflects how answers are actually formed in real situations.

The Main KPI: AVS (AI Visibility Score)

What it measures

AI Visibility Score measures one thing: how often your brand shows up in AI generated answers for category relevant prompts, from the perspective of your real buyer personas.

When I say “shows up,” I mean any case where your brand appears in the answer as one of the options. It can be mentioned, recommended, or included as one of the relevant options in a list. It doesn’t really matter how. What matters is that your brand was there at the moment your target audience asked something related to what you offer.
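To make the definition concrete, here is a minimal sketch of how such a score could be computed. It assumes the score is simply the percentage of collected answers that mention the brand at least once. The function names, the whole-word matching rule, and the sample answers with placeholder brand names are all illustrative assumptions, not Chatoptic's actual implementation.

```python
import re

def brand_appears(answer: str, brand: str) -> bool:
    """True if the brand name occurs as a whole word, ignoring case."""
    pattern = r"(?<!\w)" + re.escape(brand) + r"(?!\w)"
    return re.search(pattern, answer, flags=re.IGNORECASE) is not None

def ai_visibility_score(answers: list[str], brand: str) -> float:
    """Percentage of answers that include the brand at least once."""
    if not answers:
        return 0.0
    hits = sum(brand_appears(a, brand) for a in answers)
    return 100.0 * hits / len(answers)

# Made-up answers with placeholder brand names, for illustration only.
sample_answers = [
    "For daily training, consider BrandA or BrandB.",
    "BrandC is a popular pick for race day.",
    "Many runners like branda for its cushioning.",
    "Look for a lightweight shoe with good support.",
]
print(ai_visibility_score(sample_answers, "BrandA"))  # 50.0
```

Note that the sketch counts a mention, a recommendation, and a list entry the same way, which matches the idea that inclusion is what counts at this stage.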

Why it matters

In Google, ranking #3 can still drive meaningful traffic.

According to this study, only 0.44% of users visit Google’s second page of results. So the real goal in SEO was always simple: make it to page one. After that, you optimize to move up.

AI answers work in a similar way, but the “page” looks different.

An AI generated answer is basically the equivalent of page one in Google.

Most of the time, the model gives one direct recommendation or a short, tightly framed shortlist. So the first goal is to be there.

If your brand does not appear in the answer at all, you miss the chance to be part of the user’s decision process. That exposure simply doesn’t happen.

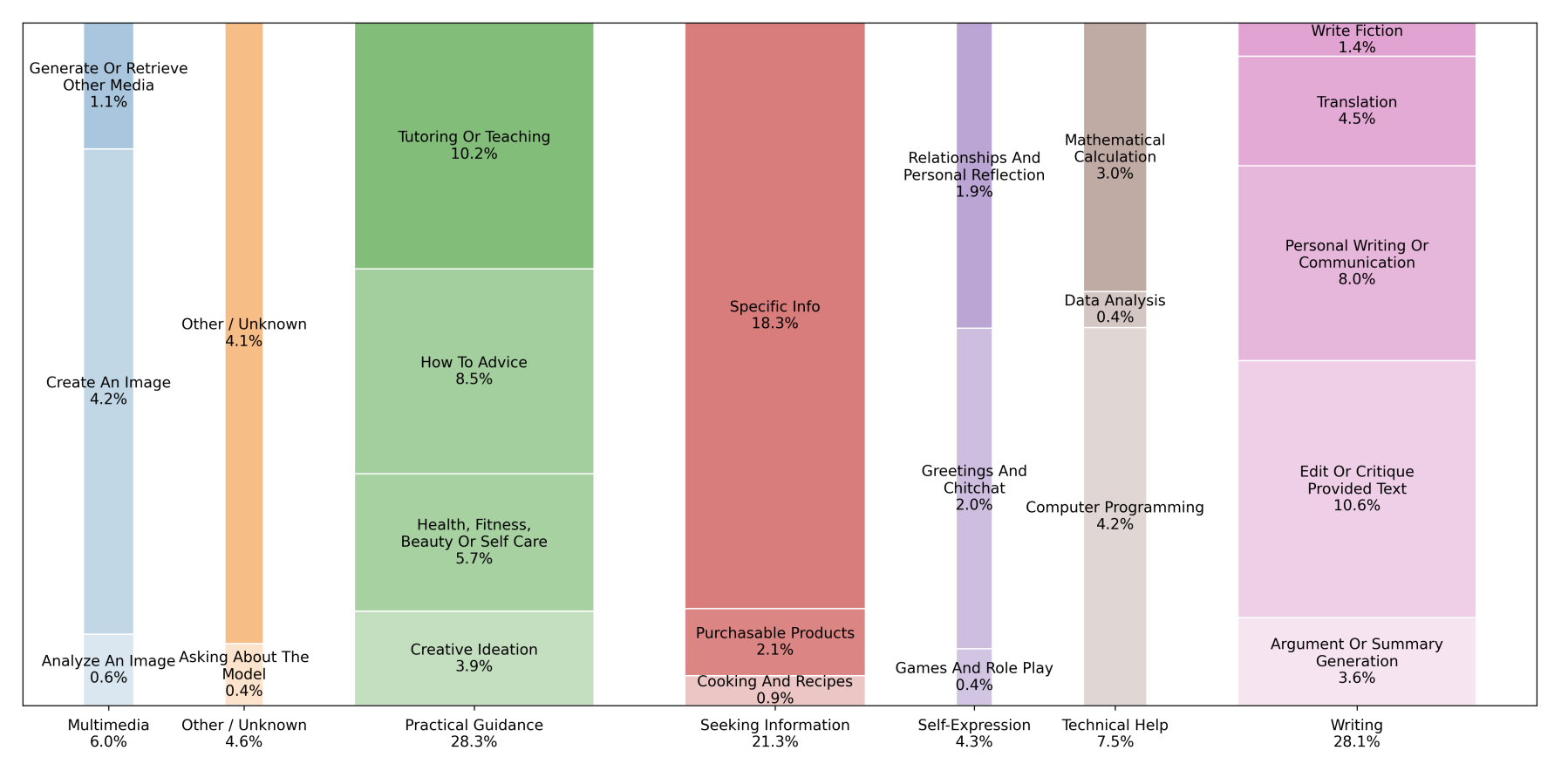

And this becomes especially critical when the intent is commercial. When someone is asking with clear buying intent, not showing up in the answer means you are invisible at the exact moment a decision is being formed. In September 2025, OpenAI released a research paper called “How People Use ChatGPT”. One of the top three use cases identified was seeking information, accounting for 24% of usage. It is also the fastest growing category, rising from 14% in July 2024 to 24% in July 2025.

Of course, once you do appear, you want to show up as high as possible, be framed clearly, carry positive sentiment, and ideally be the only brand mentioned. But all of that comes after the most basic requirement: inclusion.

This is exactly what AI Visibility Score captures: whether you are actually part of the answer when the model responds to a specific persona with a specific intent.

Measured through persona based prompts, AI Visibility Score becomes the clearest top of funnel KPI for GEO.

How to measure it in practice

You can measure AI Visibility Score in Chatoptic using a simple workflow.

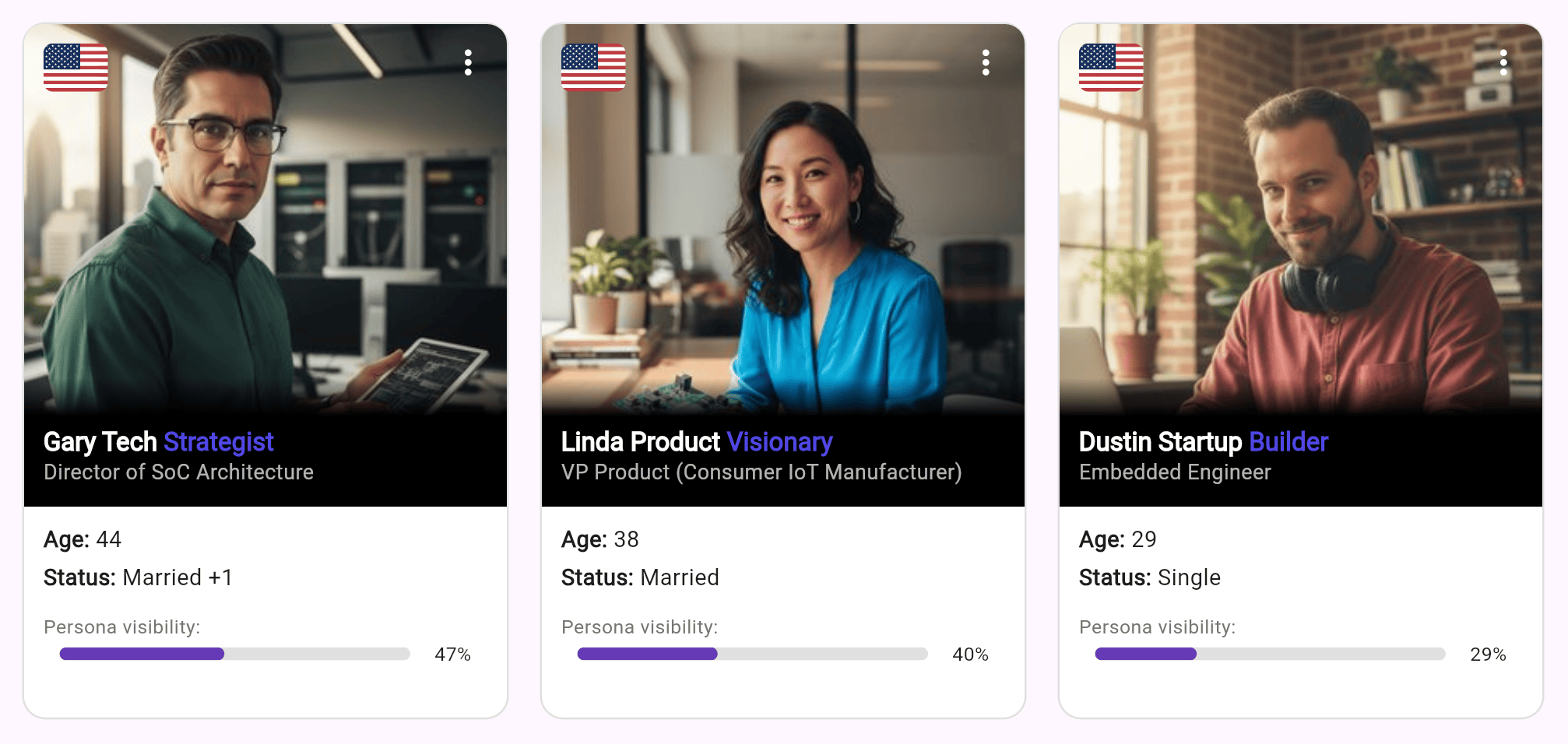

1. Define personas

Start with real buyer types. For each persona, define the basics:

- Role and context

- Goals and motivations

- Constraints and pain points

- What they care about when comparing options

- Buying habits

Each persona reflects a real way people in your category ask questions. And don’t worry if you’re not sure how to define them. Our AI can suggest personas with a high likelihood of being real potential customers in your category. You can accept them, tweak them, or reject them until you end up with a solid set of representative personas.

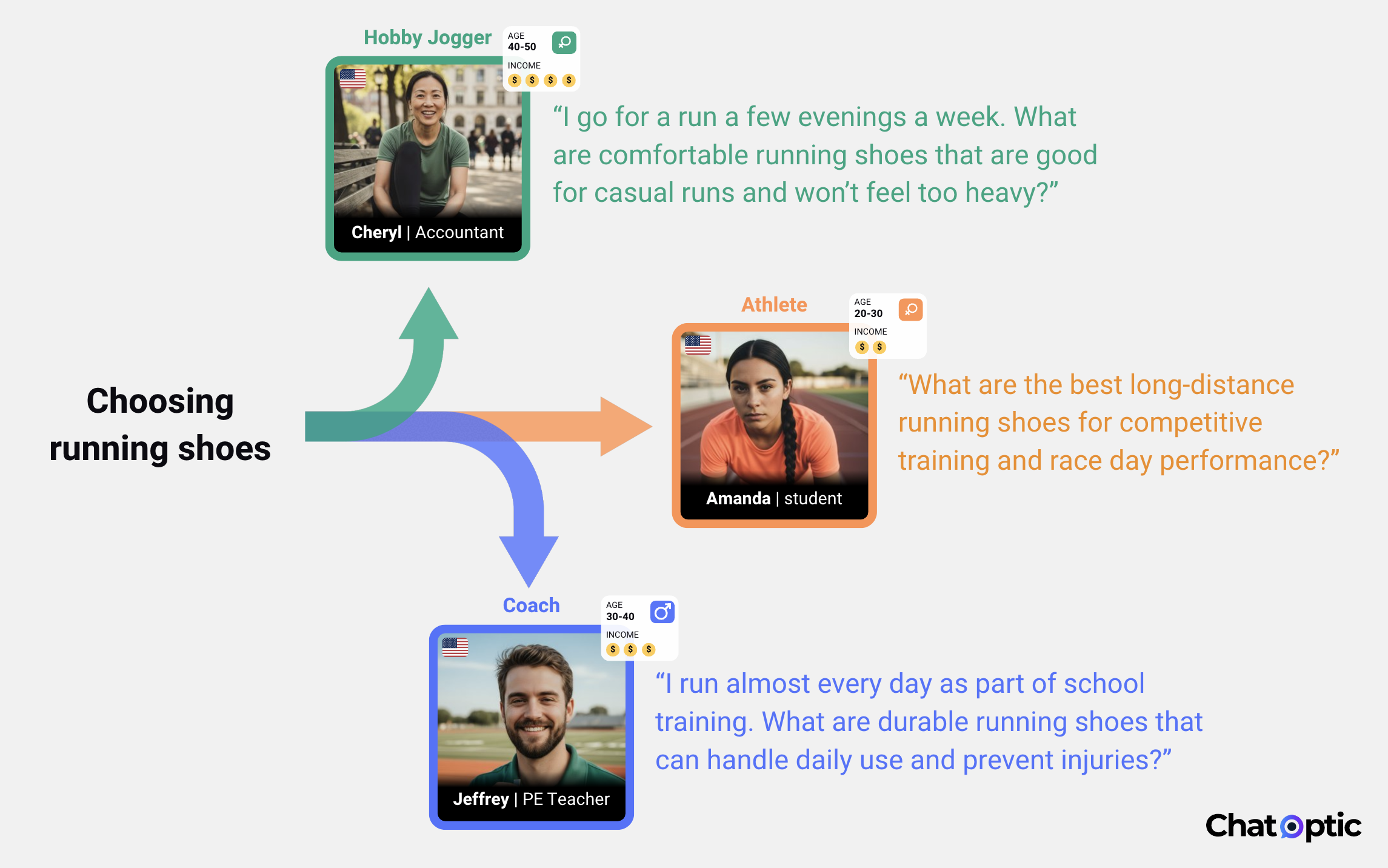

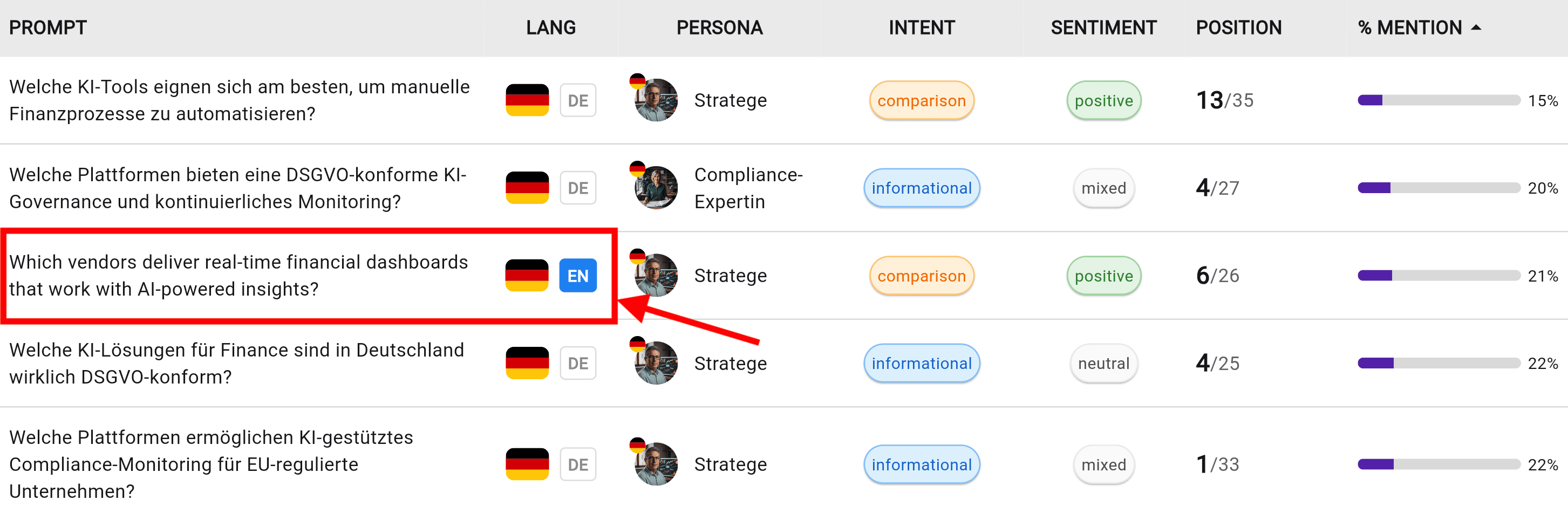

2. Map one intent into multiple persona prompts

The same intent is phrased differently by different people.

Let’s take an example: choosing running shoes

- Persona 1: Professional runner

“What are the best long distance running shoes for competitive training and race day performance?”

- Persona 2: High school PE teacher

“I run almost every day as part of school training. What are durable running shoes that can handle daily use and help prevent injuries?”

- Persona 3: Recreational runner and parent

“I run a few evenings a week. What are comfortable running shoes for casual runs that don’t feel heavy?”

All three share the same intent, but they use different wording and have different needs. As a result, they often get different AI answers. Sometimes the same brands overlap, and sometimes they don’t.

That’s why you shouldn’t measure visibility using one generic prompt. You measure it across persona variations of the same intent.
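As a rough sketch of what comparing persona variations can look like, the snippet below takes the set of brands found in each persona's answer and checks where they overlap. The brand sets are invented placeholders and the helper name is hypothetical. In practice the sets would come from the answers each persona prompt actually received.

```python
# Hypothetical brands found in each persona's answer (placeholder names).
brands_by_persona = {
    "Professional runner": {"BrandA", "BrandB"},
    "High school PE teacher": {"BrandB", "BrandC"},
    "Recreational runner and parent": {"BrandB", "BrandD"},
}

def persona_coverage(brands_by_persona: dict[str, set[str]], brand: str) -> list[str]:
    """Return the personas whose answer included the given brand."""
    return [p for p, brands in brands_by_persona.items() if brand in brands]

# Brands that every persona saw for this intent.
shared = set.intersection(*brands_by_persona.values())

print(shared)                                         # {'BrandB'}
print(persona_coverage(brands_by_persona, "BrandA"))  # ['Professional runner']
```

In this made-up case, a single generic prompt could easily miss that BrandA is visible to only one of the three personas.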

3. Expand one intent into multiple intents and query fan outs

Once you’ve defined an intent and tested it through different personas, the next step is to zoom out.

Real buyers don’t ask just one question. They explore.

They move between related intents, features, use cases, comparisons, and constraints. This is where query fan out comes into play.

Start with one core intent, then expand it into additional, closely related prompts based on:

- Your products or services

- Sub categories within your offering

- Common follow up questions buyers ask

- Commercial and evaluative angles

Each of these prompts should still be tested through different personas, because the way a CFO asks about integrations is very different from how a technical buyer or a manager does.

By doing this, you move from testing a single question to building a generative visibility map.

Instead of asking “do we show up for this prompt?”, we are asking “where do we show up across the full set of intents that matter in our category?”

This is how you start seeing patterns instead of isolated results.
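One way to picture the visibility map is as a grid of every intent paired with every persona. The sketch below builds that grid and lists the cells where the brand was missing. The intent and persona labels and the set of visible cells are illustrative assumptions, and the function names are hypothetical.

```python
from itertools import product

def build_prompt_grid(intents: list[str], personas: list[str]) -> list[tuple[str, str]]:
    """Pair every intent with every persona (the full fan out)."""
    return list(product(intents, personas))

def find_gaps(grid, visible_cells):
    """Cells of the grid where the brand did not appear in the answer."""
    return [cell for cell in grid if cell not in visible_cells]

intents = ["integrations", "pricing", "alternatives"]
personas = ["CFO", "technical buyer", "manager"]
grid = build_prompt_grid(intents, personas)

# Hypothetical results: the cells where the brand appeared.
visible_cells = {("integrations", "technical buyer"), ("pricing", "CFO")}
gaps = find_gaps(grid, visible_cells)

print(len(grid), len(gaps))  # 9 7
```

The gaps list is where the patterns show up: a whole row missing points to a weak intent, a whole column to a persona the brand is not reaching.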

From a single score to a full generative visibility map

Once you have this broader intent and prompt coverage, you can slice the same AI Visibility Score in different ways, without changing the KPI itself.

For example:

1. Persona AVS (AI Visibility Score)

How often does my brand appear when prompts are asked from the perspective of a specific persona? This helps you understand which buyer types you dominate and where you are invisible.

2. Offering AVS

If you group all prompts related to a specific product, feature, or service, you can see how visible your brand is in that area overall, across all personas. This is especially useful for multi product companies.

3. LLM AVS

You can also break this down by model, for example focusing more on ChatGPT or Gemini if one of them is more important for your market.

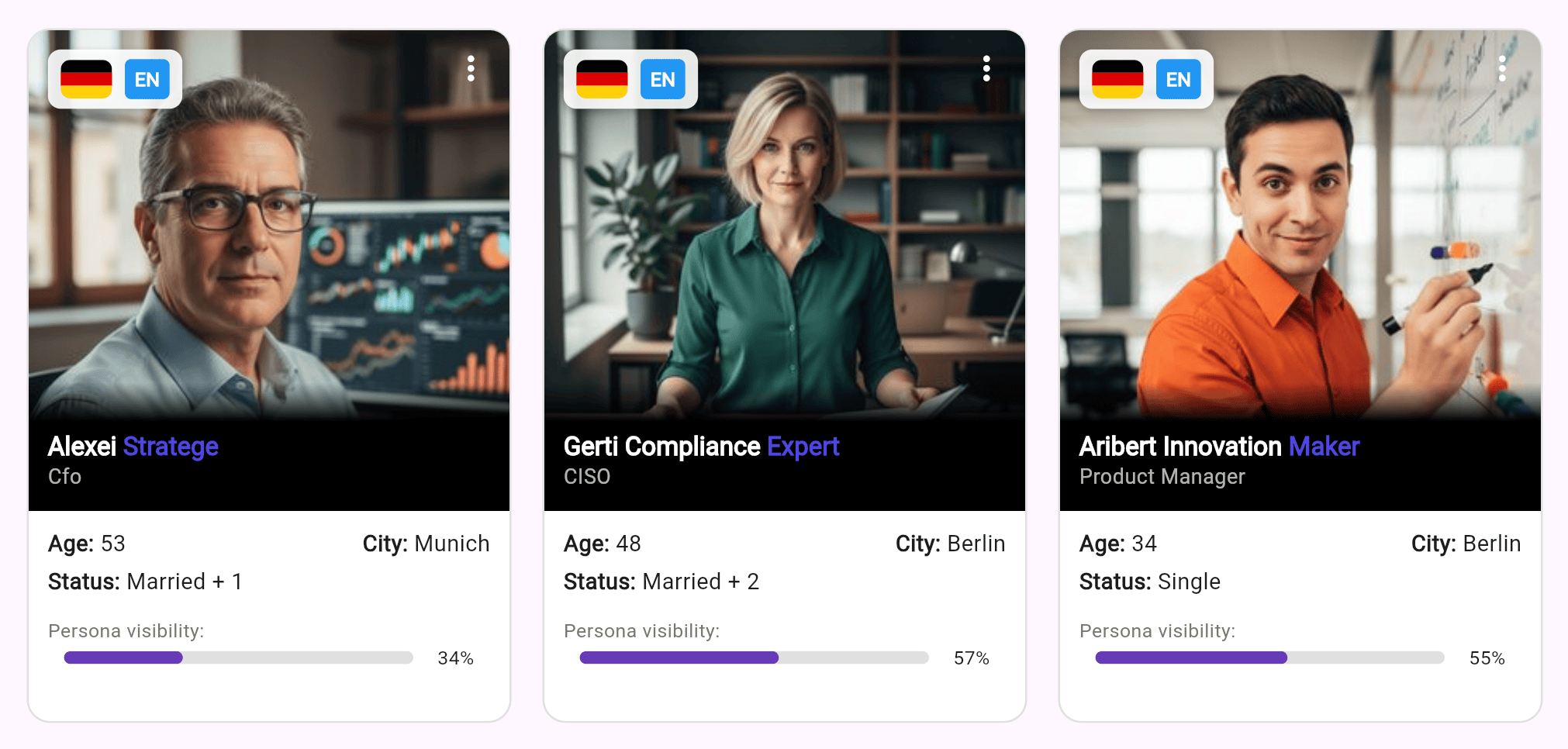

4. Market AVS

You can also look at generative visibility by market. In this view, personas are grouped by geography and language, so you’re effectively measuring how visible your brand is within a specific market.

Prompts are written in the local language and asked from that geographic context, using personas that reflect local culture and buying behavior.

This creates a realistic view of how your brand shows up in that market, based on the combined visibility of all relevant personas in that region.

Since you may not always be fluent in the local language of every market, the platform allows you to translate prompts into English (or any other language you choose), so you can validate that they are thematically accurate and aligned with the intent being tested.

Think of it as an “aggregated persona view” per market, where you see how your brand performs across the full set of local intents and prompts, not just a single persona.

All of these views are different cuts of the same metric. The KPI stays the same.

And that’s what turns AI Visibility Score from a single number into a practical decision making tool for GEO.
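The idea of slicing one metric along several dimensions can be sketched as a simple group-by. This assumes each tested prompt is stored as a record with a persona, offering, model, and market label plus a flag for whether the brand appeared. The field names and sample records are assumptions made for illustration.

```python
from collections import defaultdict

def avs_by(records: list[dict], dimension: str) -> dict[str, float]:
    """AI Visibility Score (percent) for each value of one dimension."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for record in records:
        key = record[dimension]
        totals[key] += 1
        hits[key] += int(record["brand_present"])
    return {key: 100.0 * hits[key] / totals[key] for key in totals}

# Made-up records, one per tested prompt.
records = [
    {"persona": "CFO", "offering": "integrations", "model": "ChatGPT", "market": "US", "brand_present": True},
    {"persona": "CFO", "offering": "pricing", "model": "Gemini", "market": "US", "brand_present": False},
    {"persona": "technical buyer", "offering": "integrations", "model": "ChatGPT", "market": "DE", "brand_present": True},
    {"persona": "technical buyer", "offering": "pricing", "model": "Gemini", "market": "DE", "brand_present": True},
]

print(avs_by(records, "persona"))  # {'CFO': 50.0, 'technical buyer': 100.0}
print(avs_by(records, "model"))    # {'ChatGPT': 100.0, 'Gemini': 50.0}
```

The same function serves the persona, offering, model, and market views. Only the grouping key changes, not the calculation.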

5. AVS over time

AI answers change constantly. That’s why AVS should also be viewed over time, for each of the dimensions above.

You can track AI visibility trends per persona, per category, per market, or per model, and see where your AI generative presence is improving, declining, or staying flat.

This turns AI visibility from a static snapshot into a trend you can actually manage.
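A minimal sketch of reading a trend from repeated measurements: compare the latest score with the earliest one and label the direction. The tolerance of one percentage point, the period labels, and the scores are arbitrary assumptions chosen for the example. A real setup would likely smooth over more data points.

```python
def avs_trend(snapshots: list[tuple[str, float]], tolerance: float = 1.0) -> str:
    """Label the direction of AVS across chronologically ordered snapshots.

    Each snapshot is (period label, score in percent). A change within
    +/- tolerance percentage points counts as flat.
    """
    if len(snapshots) < 2:
        return "not enough data"
    change = snapshots[-1][1] - snapshots[0][1]
    if change > tolerance:
        return "improving"
    if change < -tolerance:
        return "declining"
    return "flat"

# Made-up scores for one persona across three measurement periods.
persona_scores = [("period 1", 20.0), ("period 2", 24.0), ("period 3", 31.0)]
print(avs_trend(persona_scores))  # improving
```

Running the same check per persona, offering, market, or model shows which slices are moving and which are stuck.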

The bottom line: one metric to rule the noise

Every new customer acquisition channel comes with new metrics, and GEO is no different.

But when everything is measured, focus becomes the real challenge.

This article made one simple argument: in AI driven discovery, there is one KPI that best represents success. Not because the others don’t matter, but because they all roll up into it.

AI Visibility Score tells you whether your brand is present when real people ask AI about your category. If you have that, you can optimize the rest. If you don’t, nothing else matters.